Overview

Engineers are often faced with the challenge of pulling together projects that span multiple websites or applications, without having full cross-platform permissions. A proxy built with Node and Express can bring two websites or applications together in a single, cohesive page.

This situation comes up more often than one would think. Whether it is a question of host permissions or compatibility, it can throw up a number of roadblocks. There are several reasons a developer might not be able to run a site or app in their local environment; perhaps it’s complex to set up or requires a number of permissions. Regardless of the reason, Node and Express offer a novel way to solve the problem.

In Matt Billard’s Lightning Talk session, we will be uncovering the primary strategies for Creating An Effective Proxy Using Node And Express:

- Overview

- The Architecture

- How It Works

- “Gotchas” To Avoid

- Live Demonstrations

- Closing Thoughts

The Architecture

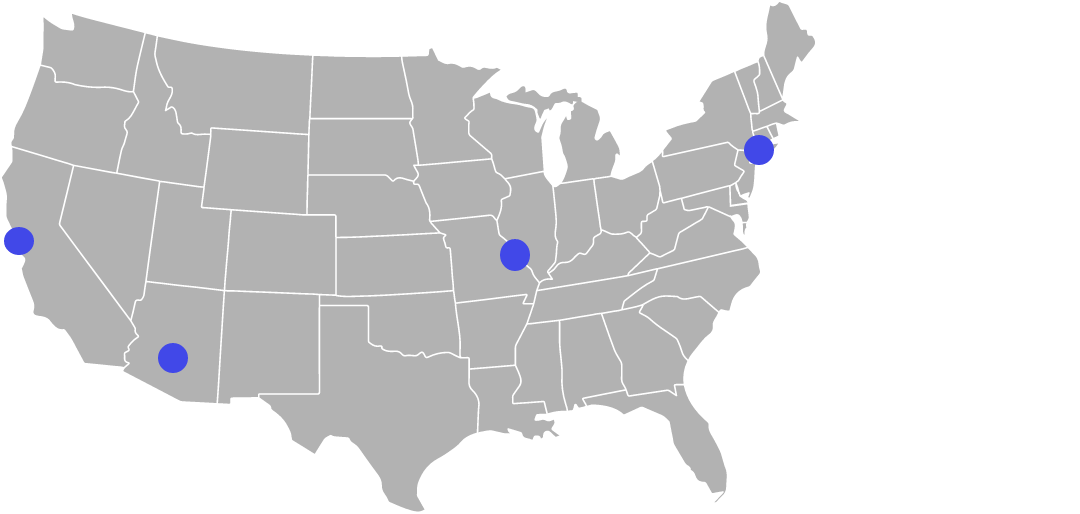

The browser makes a request to the Node/Express proxy server, which handles one of the following three scenarios:

- If the user requested an HTML page, we need to combine the pages from websites 1 and 2. The proxy first asks website 1 and then website 2 for its HTML. It then modifies the two HTML files, combines them, and returns the result to the browser. (Details on how this works below.)

- The HTML page will then request the CSS, JavaScript, and other assets it requires. These requests will again go through the proxy which will pass on the requests. If website 1 has the asset, great, the proxy will return it to the browser.

- If website 1 does not have the asset, the proxy will then ask website 2 and return it to the browser.
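The three scenarios above can be sketched as a small routing helper. This is a minimal sketch rather than the code from the talk: `SITE_1`, `SITE_2`, `wantsHtml`, and `fetchAsset` are hypothetical names, the local port is an assumption, and the global `fetch` it defaults to requires Node 18 or later.

```javascript
// Hypothetical origins; replace with the two sites being combined.
const SITE_1 = 'https://www.inrhythm.com';
const SITE_2 = 'http://localhost:3000'; // assumed local dev server

// Decide whether the browser is asking for an HTML page or for an asset.
function wantsHtml(acceptHeader = '') {
  return acceptHeader.includes('text/html');
}

// Ask website 1 for an asset first; if it does not have it, ask website 2.
// fetchFn defaults to the global fetch (Node 18+) and can be swapped in tests.
async function fetchAsset(path, fetchFn = fetch) {
  const first = await fetchFn(SITE_1 + path);
  if (first.ok) {
    return first;
  }
  return fetchFn(SITE_2 + path);
}
```

In an Express app, a catch-all route handler could check `wantsHtml(req.headers.accept)` and then either combine the two pages or send back the result of `fetchAsset(req.path)`.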

The example below uses the InRhythm.com website as the target into which an engineer injects some local code (in this case a basic Create React App project). The final result is an actual screenshot of the two websites living together in the same browser window.

How It Works

As mentioned above, website 1 and 2’s HTML are combined. This involves a few steps. A webpage can’t have two doctype, html, head, or body tags, so some regex is needed to strip them from website 2’s HTML. Once website 2’s HTML is ready, it can be injected before website 1’s closing </body> tag.

The modifications to website 1’s HTML cover a few things:

- Many websites have full ‘absolute URLs’ for their links. They look like this: https://www.inrhythm.com/who-we-are/. The problem is that if the user clicks on one, they’ll be taken away from our proxy and go to the target website. One can solve this by removing all www.something.com pieces while retaining the path after the slash.

- The injected CSS removes backgrounds and allows clicks to pass through website 2 to website 1. (Keep in mind this will probably be slightly different depending on the two sites a coder is combining.)

- The modified HTML from website 2 is injected just before website 1’s closing </body> tag.
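The steps in this section can be sketched with plain string operations. This is a minimal illustration under stated assumptions, not the code from the talk: the function names are hypothetical, the regexes are deliberately simple, and the `#root` selector in `OVERLAY_CSS` assumes website 2 is a Create React App project mounted on that element.

```javascript
// Remove the tags a page may only contain once, keeping what is inside them.
function stripDocumentTags(html) {
  return html
    .replace(/<!doctype[^>]*>/gi, '')
    .replace(/<\/?(html|head|body)(\s[^>]*)?>/gi, '');
}

// Turn absolute links into relative ones so clicks stay on the proxy.
// 'https://www.inrhythm.com/who-we-are/' becomes '/who-we-are/'.
function makeLinksRelative(html, origin) {
  return html.split(origin).join('');
}

// Hypothetical CSS: clear website 2's background and let clicks pass through.
const OVERLAY_CSS =
  '<style>#root{background:transparent;pointer-events:none}</style>';

// Inject the CSS and website 2's stripped HTML before website 1's </body>.
function combinePages(site1Html, site2Html, origin) {
  const injected = OVERLAY_CSS + stripDocumentTags(site2Html);
  const page = makeLinksRelative(site1Html, origin);
  // A replacer function keeps any "$" in the injected HTML literal.
  return page.replace(/<\/body>/i, () => injected + '</body>');
}
```

Calling `combinePages(site1Html, site2Html, 'https://www.inrhythm.com')` returns a single document with one set of wrapper tags. The exact CSS will likely need adjusting for the two sites being combined.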

“Gotchas” To Avoid

It can take a fair amount of trial and error to develop a proxy to one’s exact specifications. Some of the most common issues a coder may find themselves troubleshooting are:

- Websites usually compress or “Gzip” their content. Normally this is a great thing. It means less data is transferred and websites load more quickly. For a proxy, however, it becomes quite troublesome: an engineer can’t parse, manipulate, and modify HTML if it looks like gibberish. The solution is actually quite simple: as it turns out, there’s a request header, Accept-Encoding, that one can send to ask the server not to Gzip anything.

- When using a proxy, all requests are going to have the header “host” set to “localhost.” This is probably not a problem for most sites, but to the target server it doesn’t look like a very normal request, and indeed, one will find that some websites respond abnormally and return pages that look nothing like what was expected. The solution can be found in modifying one of the headers of the request.

- Because the proxy modifies responses quite a bit, the original ‘content-length’ header no longer matches the body, which can cause browser abnormalities. The solution to this problem is to delete the ‘content-length’ header before the proxy sends the browser any final response. This stops the browser from truncating the response and discarding all the hard work put into customizing one’s proxy.

- When combining sites that use https, the proxy might complain that the SSL certificates don’t match what it’s expecting. Turns out it’s rather easy to relax this check in the proxy’s code.
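The four fixes above can be sketched with Node’s built-in modules. This is one possible approach under stated assumptions, not the code from the talk: the helper names are hypothetical, setting “host” to the target’s host name is an assumed reading of the header fix, and the relaxed HTTPS agent is only appropriate for local development.

```javascript
const https = require('https');

// Build the headers the proxy sends on to a target site.
function buildUpstreamHeaders(browserHeaders, targetUrl) {
  const headers = { ...browserHeaders };
  // "identity" asks the server for an uncompressed body the proxy can edit.
  headers['accept-encoding'] = 'identity';
  // Assumption: replace "localhost" with the host name the target expects.
  headers.host = new URL(targetUrl).host;
  return headers;
}

// Clean the target's response headers before replying to the browser.
function buildBrowserHeaders(upstreamHeaders) {
  const headers = { ...upstreamHeaders };
  // The body was rewritten, so the original length no longer matches it.
  delete headers['content-length'];
  return headers;
}

// Local development only: accept certificates that fail verification.
const insecureAgent = new https.Agent({ rejectUnauthorized: false });
```

Header names are written in lowercase because Node lowercases incoming header names on `req.headers`. The agent can be passed as the `agent` option of `https.request` when the proxy calls a target site over https.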

Live Demonstrations

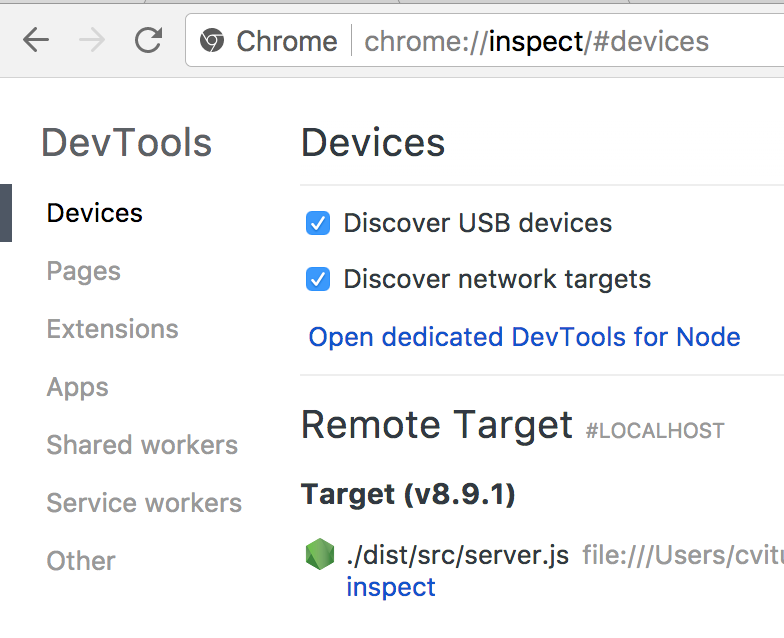

Matt Billard has crafted an intuitive demonstration to help guide you through these principles in practice.

Be sure to follow Billard’s entire Lightning Talk to view this impressive demonstration in real time.

Closing Thoughts

The Node.js framework Express allows an engineer to create web servers and APIs with minimal setup. Using Express in a Node.js application to create an API proxy that requests data from another API and returns it to a consumer is a vital skill to add to one’s toolkit. Using Express middleware to help optimize the API proxy will allow a coder to raise the bar and improve performance when returning data from the underlying API.

To develop and learn from Billard’s signature “Code Collider” proxy, feel free to download the code directly from GitHub.

Happy coding!

To learn more about Creating An Effective Proxy Using Node And Express, along with some live test samples, and to experience Matt Billard’s full Lightning Talk session, watch here.