Based on a Lightning Talk by: Ankitha Dhanekula, Senior Software Engineer @ InRhythm on December 7th, 2023

Overview

OAuth 2.0, a protocol for authorization, has evolved into a cornerstone of modern software security.

This article looks at key enhancements to OAuth 2.0, such as PKCE and JWT-formatted access tokens, and how they change the way teams secure code. We’ll walk through practical examples of where these enhancements apply and how they reshape security practices in software development:

- Overview

- Understanding OAuth 2.0

- PKCE (Proof Key For Code Exchange)

- JWT (JSON Web Tokens) As OAuth Tokens

- Enhanced Application In Real-World Scenarios

- Reshaping Security Approaches

- Closing Thoughts

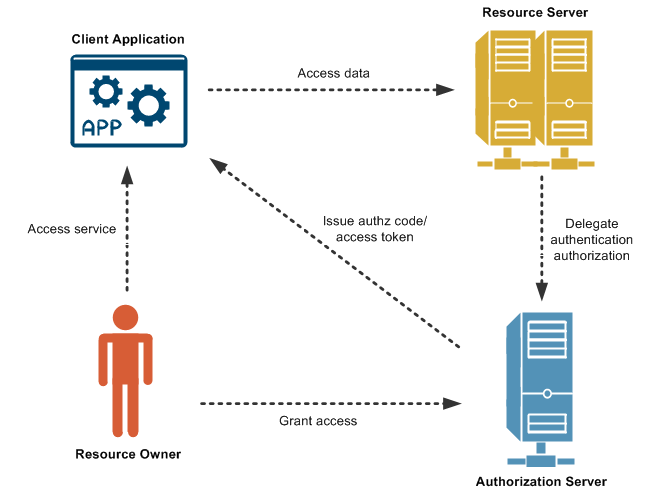

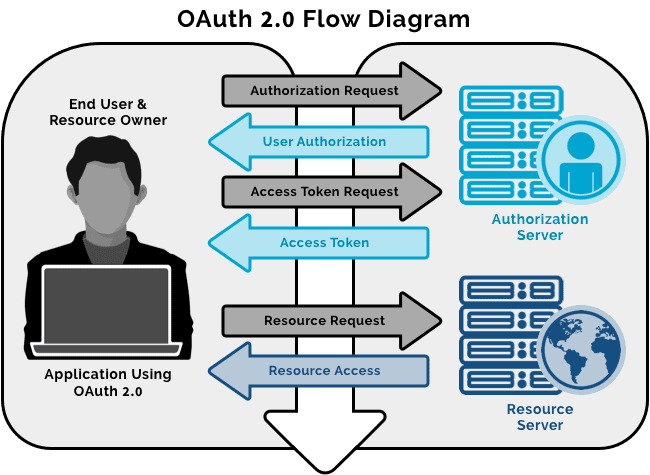

Understanding OAuth 2.0

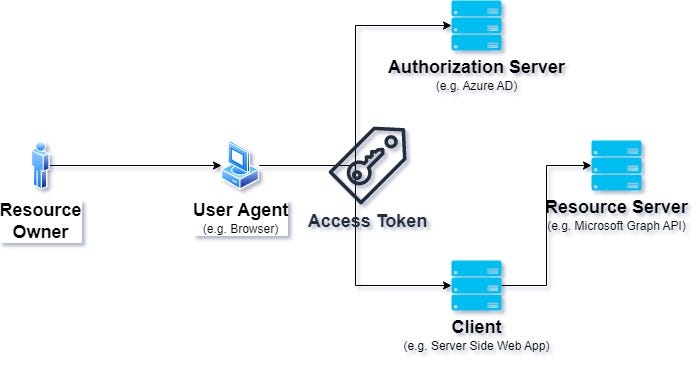

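OAuth 2.0 (RFC 6749) is an open standard for access delegation. It lets a client application access resources hosted by another service on behalf of a resource owner, usually a user, without handling that user’s credentials. It relies on TLS (HTTPS) to protect tokens and credentials in transit and has become the de facto standard for securing APIs.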
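To make the flow concrete, here is a minimal sketch of the first step of the authorization code grant: the client sends the user's browser to the authorization server with a request like the one below. The endpoint URL, client ID, redirect URI, and scope names are hypothetical placeholders. The query parameters (`response_type`, `client_id`, `redirect_uri`, `scope`, `state`) are the ones RFC 6749 defines for this request.

```python
import secrets
from urllib.parse import urlencode

# Hypothetical values for illustration; a real client registers these with its provider
AUTHORIZE_URL = "https://auth.example.com/authorize"
CLIENT_ID = "demo-client"
REDIRECT_URI = "https://app.example.com/callback"


def build_authorization_url(scopes: list[str]) -> tuple[str, str]:
    """Return the redirect URL and the state value the client must remember."""
    # An unguessable state value ties the later callback to this request (CSRF protection)
    state = secrets.token_urlsafe(16)
    params = {
        "response_type": "code",    # ask for an authorization code
        "client_id": CLIENT_ID,
        "redirect_uri": REDIRECT_URI,
        "scope": " ".join(scopes),  # OAuth scopes are space-delimited
        "state": state,
    }
    return f"{AUTHORIZE_URL}?{urlencode(params)}", state


url, state = build_authorization_url(["profile", "orders:read"])
print(url)
```

After the user approves, the authorization server redirects back to the redirect URI with a `code` and the same `state`; the client checks `state` before exchanging the code for tokens at the token endpoint.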

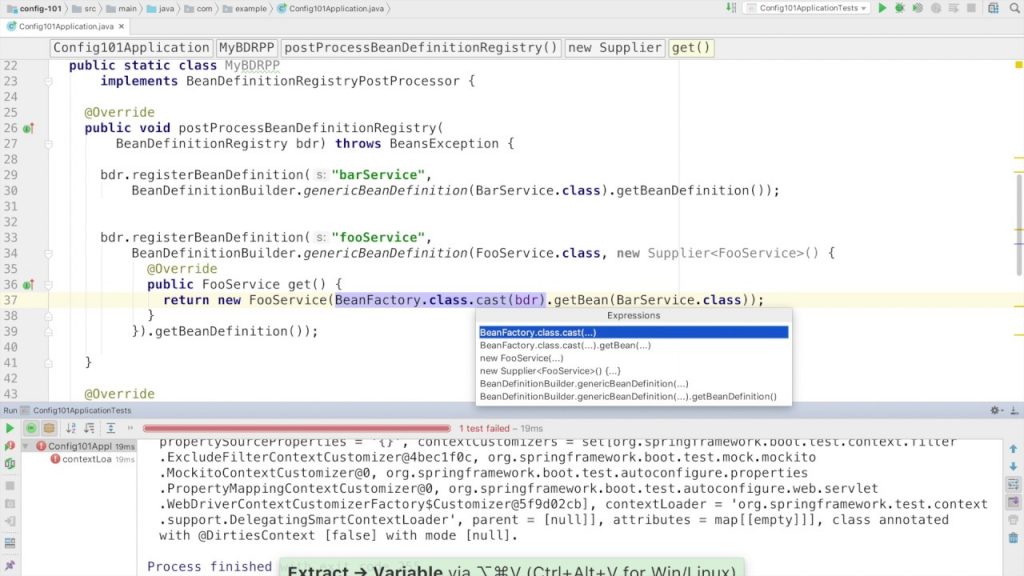

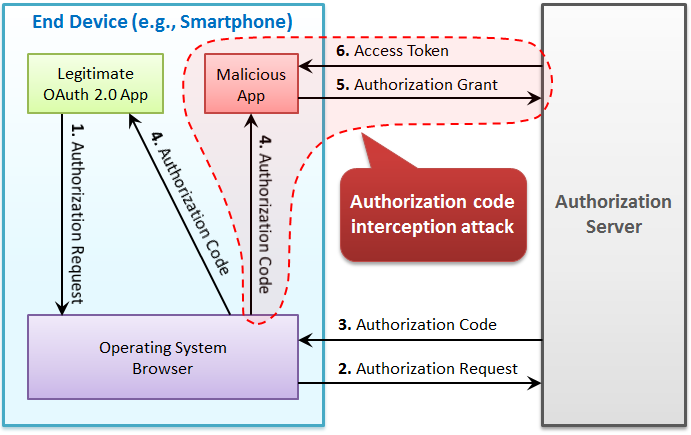

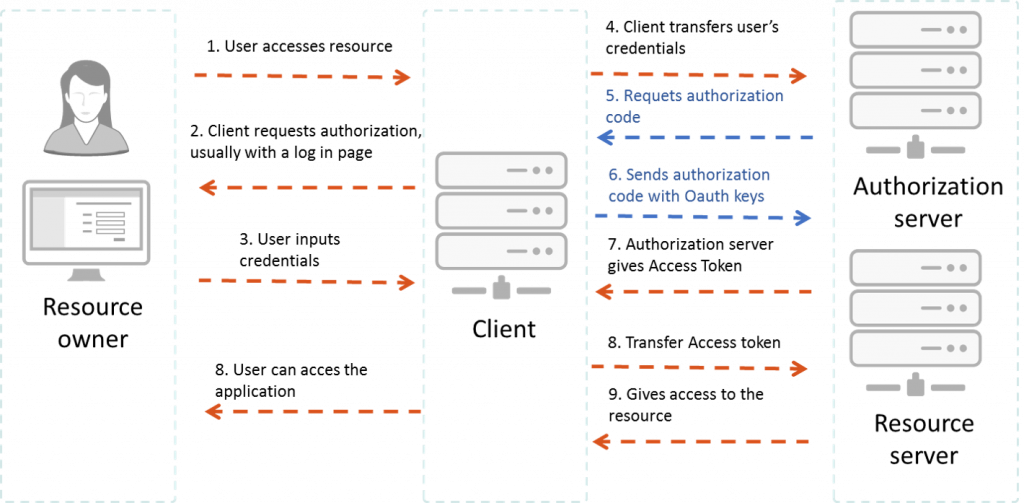

PKCE (Proof Key For Code Exchange)

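The standard authorization code flow is vulnerable to authorization code interception, particularly in mobile and native apps, where another app on the device can register the same custom URI scheme and capture the redirect that carries the code. PKCE (RFC 7636) mitigates this risk: the client sends a hashed code challenge with the authorization request and must present the matching secret code verifier when it exchanges the code, so only the client that started the flow can redeem the authorization code.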

Example:

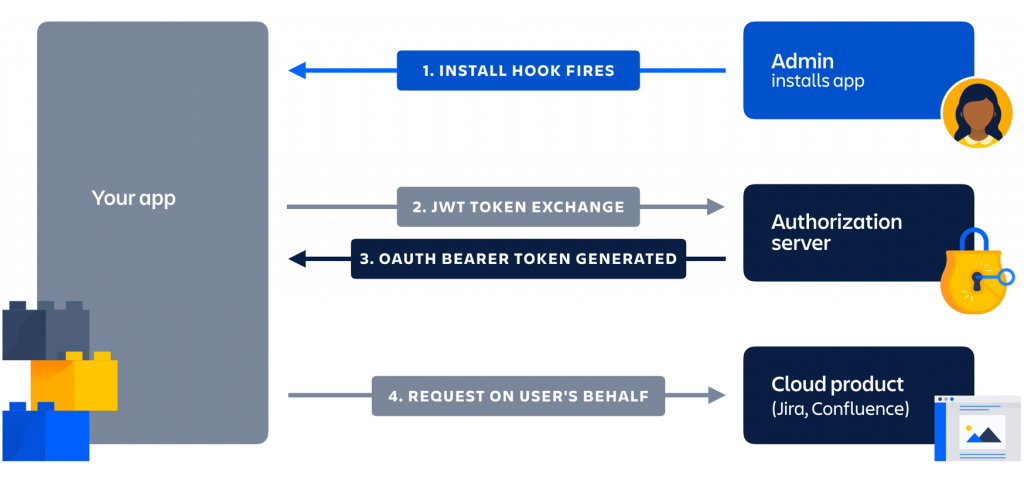
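The sketch below shows the PKCE mechanics using the `S256` method from RFC 7636: the client creates a random `code_verifier`, derives `code_challenge = BASE64URL(SHA256(code_verifier))`, and sends only the challenge with the authorization request. At the token endpoint, the authorization server recomputes the challenge from the presented verifier. The function names are illustrative and not taken from any particular library.

```python
import base64
import hashlib
import hmac
import secrets


def make_code_verifier() -> str:
    # 32 random bytes -> 43 base64url characters, the minimum length RFC 7636 allows
    return secrets.token_urlsafe(32)


def code_challenge_s256(verifier: str) -> str:
    # BASE64URL(SHA256(ASCII(code_verifier))) with the '=' padding removed
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def server_verifies(stored_challenge: str, presented_verifier: str) -> bool:
    # Authorization server side: recompute the challenge and compare in constant time
    recomputed = code_challenge_s256(presented_verifier)
    return hmac.compare_digest(recomputed, stored_challenge)


# Client: keep the verifier secret, send only the challenge with the authorization request
verifier = make_code_verifier()
challenge = code_challenge_s256(verifier)
auth_params = {"code_challenge": challenge, "code_challenge_method": "S256"}

# Token exchange: only the holder of the original verifier can redeem the code
assert server_verifies(challenge, verifier)
assert not server_verifies(challenge, make_code_verifier())
```

An attacker who intercepts the authorization code, but not the verifier that never left the client, cannot complete the exchange.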

JWT (JSON Web Tokens) As OAuth Tokens

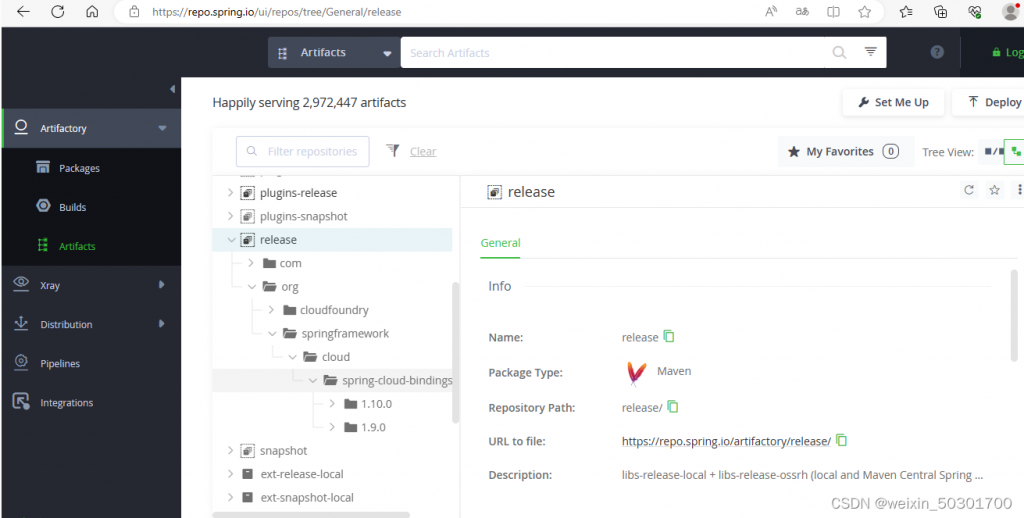

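Many OAuth 2.0 deployments now issue access tokens as JWTs, and RFC 9068 standardizes a JWT profile for access tokens. A JWT is compact and self-contained: its signed claims, such as issuer, subject, audience, expiry, and scopes, let a resource server validate the token locally instead of calling the authorization server on every request.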

Example:
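Here is a minimal, standard-library-only sketch of issuing and validating a JWT access token. To stay self-contained it assumes a shared HMAC key (`HS256`); production authorization servers more commonly sign with an asymmetric key such as `RS256` and publish the public keys so any service can verify tokens. The key, claim values, and audience name are hypothetical, and a maintained JWT library is generally preferable to hand-rolled parsing.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def b64url_encode(data: bytes) -> str:
    # JWTs use base64url without '=' padding
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    # Restore the padding stripped during encoding before decoding
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(claims: dict, key: bytes) -> str:
    # "at+jwt" is the type RFC 9068 assigns to JWT access tokens
    header = {"alg": "HS256", "typ": "at+jwt"}
    signing_input = ".".join(
        b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{b64url_encode(signature)}"


def validate_token(token: str, key: bytes, audience: str,
                   now: Optional[float] = None) -> dict:
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    header = json.loads(b64url_decode(header_b64))
    # Pin the expected algorithm rather than trusting the token's own header
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(key, f"{header_b64}.{payload_b64}".encode("ascii"),
                        hashlib.sha256).digest()
    if not hmac.compare_digest(expected, b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    claims = json.loads(b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if claims.get("exp", 0) <= current:
        raise ValueError("token expired")
    # This sketch assumes "aud" is a single string, not a list
    if claims.get("aud") != audience:
        raise ValueError("wrong audience")
    return claims


key = b"demo-shared-secret"  # hypothetical; never hard-code real signing keys
token = issue_token({"iss": "https://auth.example.com", "sub": "user-123",
                     "aud": "orders-api", "scope": "orders:read",
                     "exp": int(time.time()) + 300}, key)
print(validate_token(token, key, audience="orders-api")["scope"])
```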

Enhanced Application In Real-World Scenarios

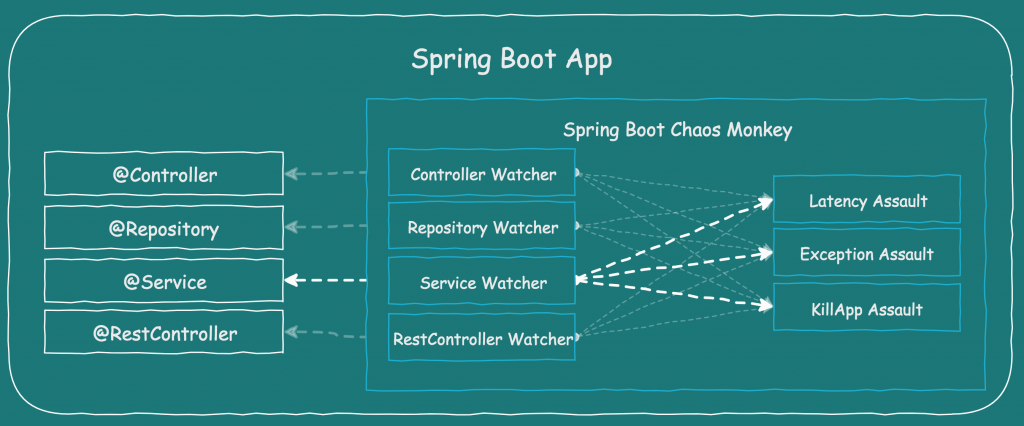

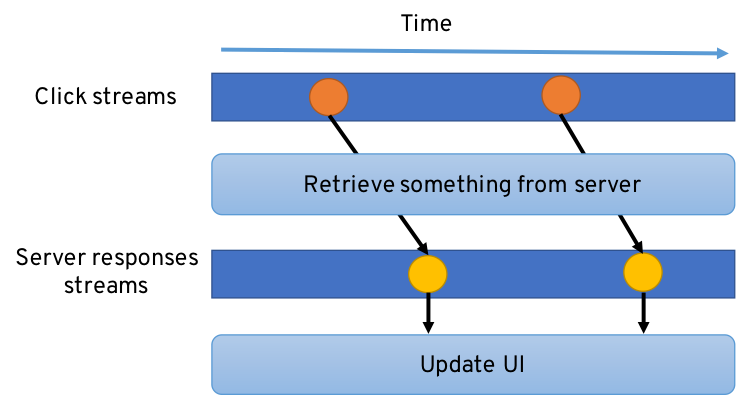

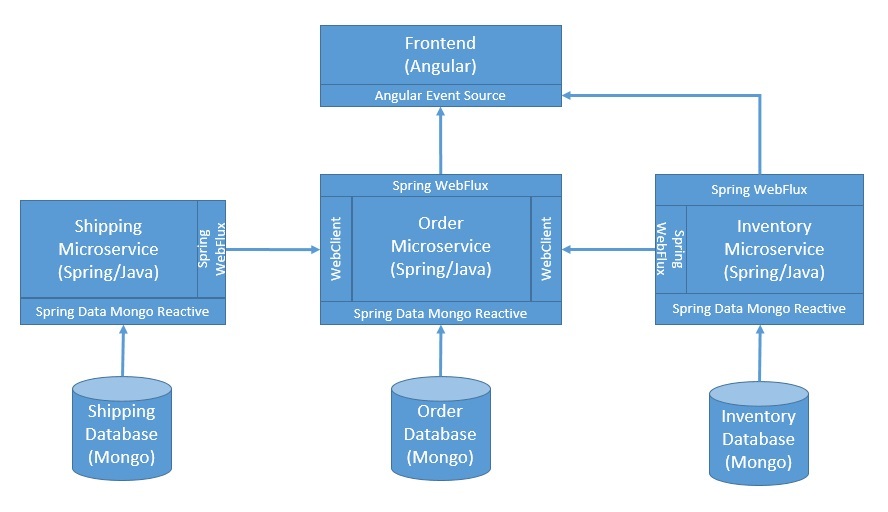

- Securing Microservices Communication

OAuth 2.0 is widely used to secure communication between microservices. Each service authenticates to a central authorization server and receives an access token, typically short-lived and scoped to the APIs it needs, and the receiving service checks that token before exchanging any data.

Example:

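- Microservice A obtains an access token from the authorization server (for example, through the client credentials grant) and calls Microservice B’s API with it.

- Microservice B validates the token to confirm the caller’s identity and permissions before serving the request.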
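For service-to-service calls like this, the client credentials grant is a common choice. Below is a minimal sketch of how Microservice A might build its token request: a form-encoded `grant_type=client_credentials` POST to the token endpoint, authenticated with HTTP Basic as RFC 6749 describes. The token URL, client ID, secret, and scope are hypothetical, and the request is only constructed here, not sent.

```python
import base64
from urllib.parse import quote_plus, urlencode
from urllib.request import Request

# Hypothetical registration details for Microservice A
TOKEN_URL = "https://auth.example.com/token"
CLIENT_ID = "service-a"
CLIENT_SECRET = "change-me"


def build_client_credentials_request(scope: str) -> Request:
    # RFC 6749 form-encodes the id and secret before combining them into Basic credentials
    raw = f"{quote_plus(CLIENT_ID)}:{quote_plus(CLIENT_SECRET)}"
    basic = base64.b64encode(raw.encode("utf-8")).decode("ascii")
    body = urlencode({"grant_type": "client_credentials", "scope": scope})
    return Request(
        TOKEN_URL,
        data=body.encode("ascii"),
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


req = build_client_credentials_request("orders:read")
# Sending it (e.g. urllib.request.urlopen(req)) should return JSON containing an
# access_token, which Microservice A then sends to B as "Authorization: Bearer <token>"
```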

- Mobile App Authorization

OAuth 2.0 has become the go-to solution for mobile app authorization. Using the authorization code flow with PKCE, a mobile app can obtain access to user data without ever seeing the user’s password.

Example:

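- The mobile app sends the user to the authorization server (usually in the system browser) to approve the requested access.

- The app exchanges the returned authorization code for an access token and uses it to call protected APIs on the user’s behalf.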
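A minimal sketch of the app side once the user approves: the app receives the redirect, checks that `state` matches what it sent, and builds the token request body that trades the code and its PKCE `code_verifier` for tokens. Because a mobile app is a public client, the request carries no client secret. The redirect URI scheme, client ID, and helper names are hypothetical.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical values registered for the mobile app
CLIENT_ID = "mobile-app"
REDIRECT_URI = "com.example.app:/oauth2redirect"


def handle_redirect(callback_url: str, expected_state: str) -> str:
    """Return the authorization code after checking the callback belongs to our request."""
    query = parse_qs(urlsplit(callback_url).query)
    if "error" in query:
        raise RuntimeError(f"authorization failed: {query['error'][0]}")
    state = query.get("state", [None])[0]
    if state != expected_state:
        # A missing or mismatched state means this app did not start the flow
        raise RuntimeError("state mismatch")
    return query["code"][0]


def token_request_body(code: str, code_verifier: str) -> str:
    # Form body POSTed to the token endpoint; the verifier proves this app started the flow
    return urlencode({
        "grant_type": "authorization_code",
        "code": code,
        "redirect_uri": REDIRECT_URI,
        "client_id": CLIENT_ID,
        "code_verifier": code_verifier,
    })


code = handle_redirect("com.example.app:/oauth2redirect?code=abc123&state=xyz", "xyz")
body = token_request_body(code, "verifier-kept-on-device")
```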

Reshaping Security Approaches

- Focus On Delegated Authorization

OAuth 2.0 encourages a shift toward delegated authorization, where applications act on behalf of users without the users handing over their passwords, and each application receives only the access (scopes) the user approves.

Example:

A third-party application can access specific resources on behalf of a user, limited to the scopes the user granted, without ever receiving the user’s login credentials.
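One way a resource server can enforce this kind of limited, delegated access is to map each operation to a required scope and check it against the `scope` claim of the already-validated access token. The sketch below assumes space-delimited scopes, as OAuth 2.0 uses, plus hypothetical calendar scopes and endpoints; signature and expiry checks are assumed to have happened earlier.

```python
# Hypothetical mapping from API operations to the scope each one requires
REQUIRED_SCOPE = {
    ("GET", "/events"): "calendar:read",
    ("POST", "/events"): "calendar:write",
}


class InsufficientScope(Exception):
    """Would map to HTTP 403 with error="insufficient_scope" (RFC 6750)."""


def authorize(claims: dict, method: str, path: str) -> None:
    required = REQUIRED_SCOPE.get((method, path))
    if required is None:
        # Deny by default: operations without a mapped scope are not reachable
        raise InsufficientScope(f"no scope grants {method} {path}")
    granted = set(claims.get("scope", "").split())
    if required not in granted:
        raise InsufficientScope(f"{method} {path} requires {required}")


# A third-party app that the user approved only for reading
third_party = {"sub": "user-123", "client_id": "calendar-widget", "scope": "calendar:read"}
authorize(third_party, "GET", "/events")        # allowed
try:
    authorize(third_party, "POST", "/events")   # rejected: write access was never granted
except InsufficientScope as err:
    print(err)
```

The deny-by-default choice means a newly added endpoint stays closed until someone decides which scope should unlock it.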

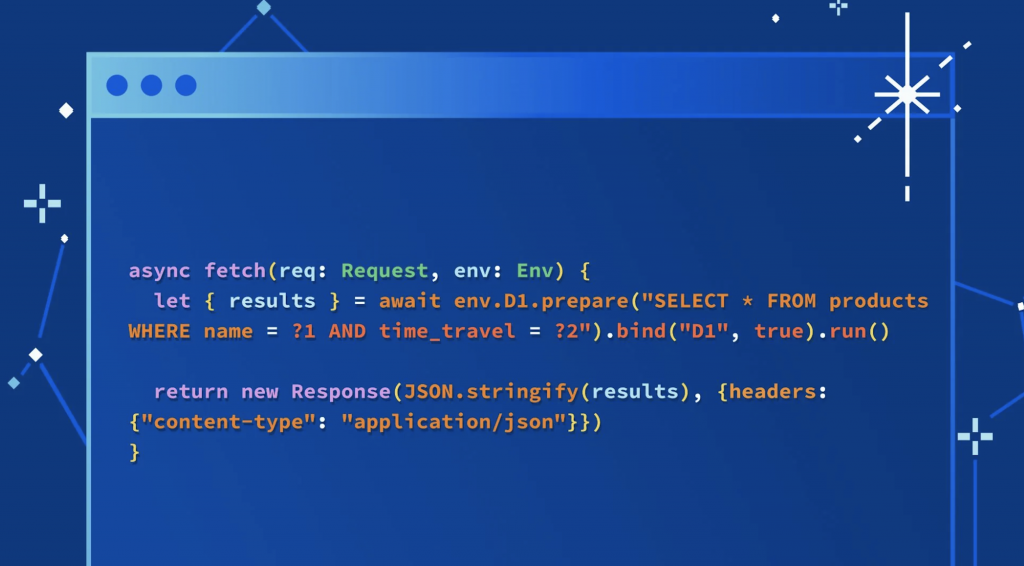

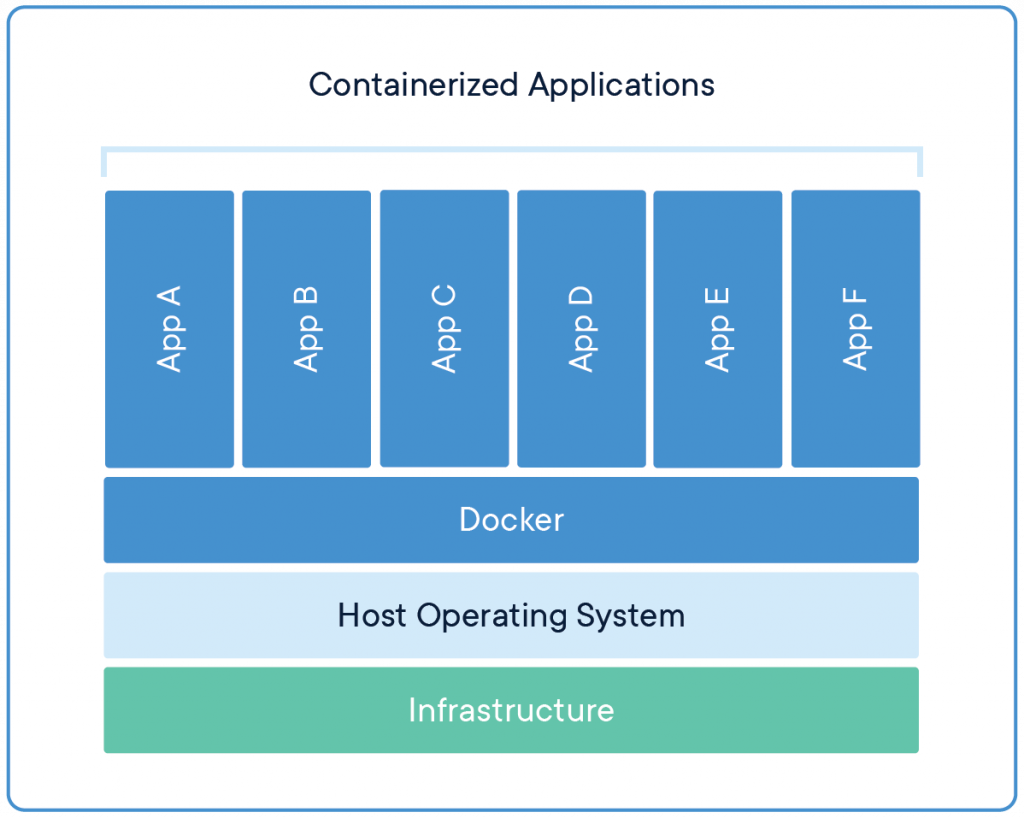

- Scalable Authorization For Modern Architectures

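In microservice and serverless architectures, OAuth 2.0 offers a scalable, decentralized approach to authorization: a central authorization server issues tokens, and each service can validate them on its own (for example, by verifying a JWT’s signature) instead of consulting a shared session store on every request.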

Example:

OAuth 2.0 enables secure communication between serverless functions or microservices within a distributed system.
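A practical detail at scale is that callers should not fetch a new token for every request. The sketch below caches an access token and refreshes it shortly before its `expires_in` lifetime runs out, so many calls from a function or service share one token. The `fetch` callable, the 30-second refresh skew, and the class name are assumptions for illustration; in a serverless runtime the cache only lives as long as the warm instance, and this version is not thread-safe.

```python
import time
from typing import Callable, Optional


class TokenCache:
    """Reuse an access token until shortly before it expires (not thread-safe)."""

    def __init__(self, fetch: Callable[[], dict], skew_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch        # returns a token response with access_token and expires_in
        self._skew = skew_seconds  # refresh this many seconds before expiry
        self._clock = clock
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        now = self._clock()
        if self._token is None or now >= self._expires_at - self._skew:
            response = self._fetch()
            self._token = response["access_token"]
            self._expires_at = now + float(response["expires_in"])
        return self._token


calls = []


def fake_fetch() -> dict:
    # Stand-in for a real POST to the token endpoint
    calls.append(1)
    return {"access_token": f"token-{len(calls)}", "expires_in": 300}


fake_now = [0.0]
cache = TokenCache(fake_fetch, clock=lambda: fake_now[0])
cache.get()
cache.get()          # reuses the cached token, no second fetch
fake_now[0] = 280.0  # inside the 30-second refresh window
cache.get()          # fetches a fresh token
```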

Closing Thoughts

Enhancements such as PKCE and JWT-formatted access tokens keep OAuth 2.0 at the forefront of software security. From mitigating authorization code interception to enabling secure mobile app authorization, OAuth 2.0 is a versatile tool for securing modern software architectures.

Teams embracing OAuth 2.0 are not just adopting a security protocol; they are reshaping how security is approached, ensuring scalability, flexibility, and robust protection against evolving threats.